flynn.gg

Christopher Flynn

Machine Learning

Systems Architect,

PhD Mathematician


Language Agnostic Airflow on Kubernetes

One of my current data engineering initiatives at SimpleBet is to centralize and productionize ETL processes and data. Internally, we have sports data we’ve gathered from various sources. The structure of the data varies wildly from source to source, and the data requirements differ greatly between engineering and data science applications. These requirements include providing both normalized and denormalized datasets across the organization with additional optimizations for various downstream use cases.

To accomplish this, we need an ETL tool. Apache Airflow, a Python application, has become a de facto industry standard for managing these types of workflows. We use Python a lot for machine learning research, but most of our engineers work in Elixir and Rust.

For that reason, I wanted to consider a platform tool that is language agnostic. In my past experience with Airflow, I relied heavily on the PythonOperator and on custom-built operators for ECS Fargate and Databricks jobs. More recently, however, version 1.10 of Airflow added integrations with Kubernetes, which offer an elegant solution.

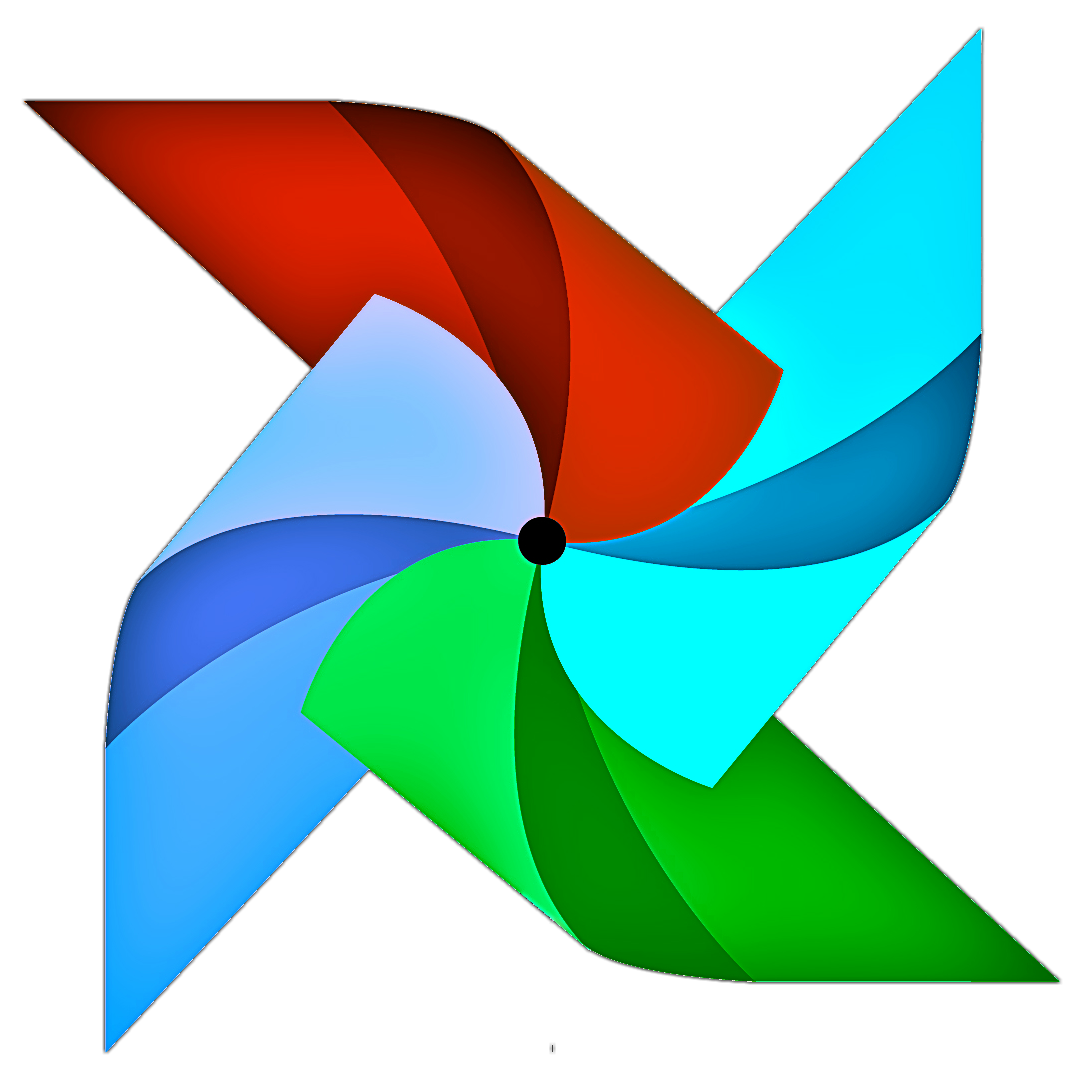

These upgrades to Airflow allow the use of the KubernetesExecutor in place of the previously standard CeleryExecutor. With the KubernetesExecutor, the scheduler spawns Airflow containers as worker pods in a Kubernetes cluster, and each worker pod runs a task defined by its Operator. This eliminates the need for a distributed pool of Celery workers, and for a message broker such as RabbitMQ or Redis to maintain a task queue. Both workers and tasks can exist ephemerally within the cluster.

Airflow also now provides the KubernetesPodOperator, an operator that launches pods in a Kubernetes cluster to run a task's code. Because it can launch arbitrary Docker containers, task execution logic no longer has to be written in Python.

With the KubernetesPodOperator, we can package Elixir tasks and Python tasks in separate Docker containers, which are then spawned ephemerally by KubernetesExecutor workers. The operator is configured to terminate pods on completion and to collect all output logs from those task containers so they can be viewed in the Airflow webserver UI. The logs are stored in a shared PersistentVolume that the webserver, scheduler, and workers can all access. The diagram above loosely illustrates this architecture.
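As a rough illustration of the setup described above, here is a minimal sketch of a DAG with one Elixir task and one Python task. The image names, the `airflow` namespace, the commands, and the helper names `pod_task_kwargs` and `build_dag` are all hypothetical. The import path is the Airflow 1.10 contrib location and differs in later releases.

```python
from datetime import datetime


def pod_task_kwargs(task_id, image, cmds, arguments=None):
    """Build keyword arguments for one KubernetesPodOperator task."""
    return {
        "task_id": task_id,
        # Kubernetes pod names may not contain underscores.
        "name": task_id.replace("_", "-"),
        "namespace": "airflow",          # assumed namespace
        "image": image,
        "cmds": cmds,
        "arguments": arguments or [],
        "get_logs": True,                # surface container output in the Airflow UI
        "is_delete_operator_pod": True,  # remove the pod once the task completes
        "in_cluster": True,              # Airflow itself is running inside the cluster
    }


# Hypothetical Elixir task: evaluate one function inside a Mix project image.
EXTRACT = pod_task_kwargs(
    "extract_feed",
    image="registry.example.com/etl-elixir:latest",
    cmds=["mix", "run", "-e"],
    arguments=["Etl.Extract.run()"],
)

# Hypothetical Python task: run a module from a separate image.
TRANSFORM = pod_task_kwargs(
    "transform_feed",
    image="registry.example.com/etl-python:latest",
    cmds=["python", "-m", "etl.transform"],
)


def build_dag():
    """Assemble the DAG. Airflow is imported here so the helpers above stay importable without it."""
    from airflow import DAG
    from airflow.contrib.operators.kubernetes_pod_operator import KubernetesPodOperator

    dag = DAG(
        "mixed_language_etl",
        start_date=datetime(2020, 1, 1),
        schedule_interval="@daily",
    )
    extract = KubernetesPodOperator(dag=dag, **EXTRACT)
    transform = KubernetesPodOperator(dag=dag, **TRANSFORM)
    extract >> transform  # run the Elixir task before the Python task
    return dag
```

In a real DAG file, the result would be assigned at module level (`dag = build_dag()`) so that the scheduler can discover it. From Airflow's point of view, the only difference between the two tasks is the image and the command.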

Given the object-oriented nature of Airflow Operators, we can also subclass the KubernetesPodOperator into language-specific PodOperators, which abstract away and unify much of the common Kubernetes configuration required to define tasks. This results in much simpler DAG definitions with less boilerplate configuration.
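A language-specific operator might look something like the sketch below. This is an illustration under assumptions, not our actual implementation: `ElixirPodOperator`, its `function_call` parameter, the default image, and the namespace are hypothetical names. The import paths are the Airflow 1.10 ones, and the `except` branch only provides a stand-in so the sketch can be run without Airflow installed.

```python
try:
    # Airflow 1.10 import paths; later releases moved these.
    from airflow.contrib.operators.kubernetes_pod_operator import KubernetesPodOperator
    from airflow.utils.decorators import apply_defaults
except ImportError:
    class KubernetesPodOperator:
        """Stand-in that only records its keyword arguments as attributes."""

        def __init__(self, **kwargs):
            for key, value in kwargs.items():
                setattr(self, key, value)

    def apply_defaults(func):
        return func


class ElixirPodOperator(KubernetesPodOperator):
    """Run a single Elixir function call in a pod built from a shared image."""

    @apply_defaults
    def __init__(
        self,
        function_call,
        image="registry.example.com/etl-elixir:latest",  # hypothetical image
        **kwargs
    ):
        # Shared Kubernetes settings live here instead of in every DAG file.
        kwargs.setdefault("namespace", "airflow")  # assumed namespace
        kwargs.setdefault("name", kwargs["task_id"].replace("_", "-"))
        kwargs.setdefault("get_logs", True)
        kwargs.setdefault("is_delete_operator_pod", True)
        kwargs.setdefault("in_cluster", True)
        super().__init__(
            image=image,
            cmds=["mix", "run", "-e"],
            arguments=[function_call],
            **kwargs
        )


# A task definition then shrinks to the part that is specific to the task.
extract = ElixirPodOperator(task_id="extract_feed", function_call="Etl.Extract.run()")
```

A `PythonPodOperator` could follow the same pattern with a different default image and command, so DAG authors would only supply a task id and the code to run.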
